Background

AI is receiving a lot of attention across media, commercial, technical and legal circles.

For businesses that intend to procure AI services, a basic understanding of where things currently stand, legally and from a regulatory perspective, can help with strategic procurement and product development planning, and is central to any risk assessment.

AI is just one part of the digital revolution that is testing legal and regulatory minds. Technology is advancing so quickly that the much slower regulatory machine is struggling to keep up. That said, the UK government has identified “digital” as a cornerstone of both global and national policy, recognising that the advent of digital technology (including AI) presents great opportunities as well as great challenges (cyber security is another hot topic!).

AI law

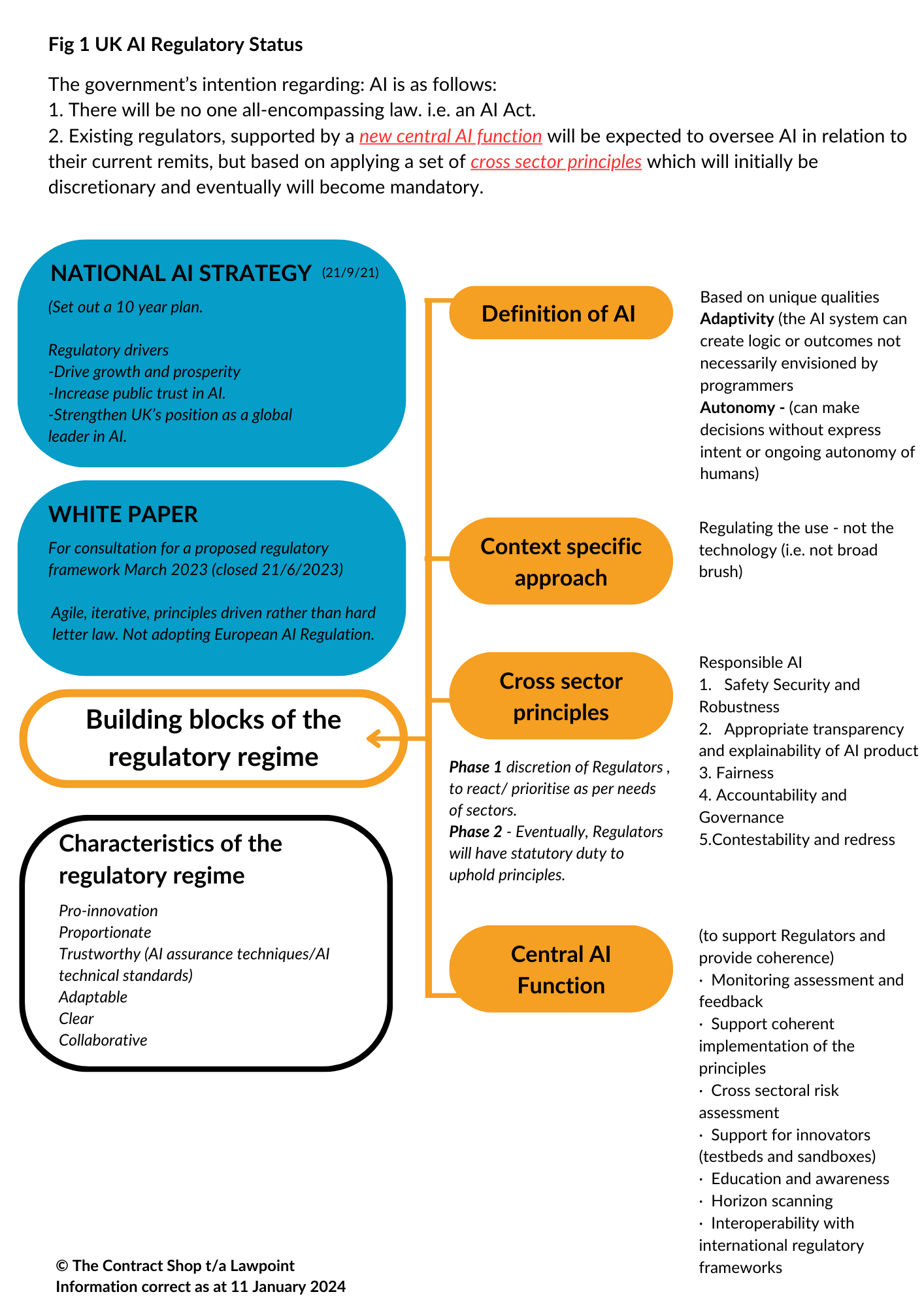

The EU is close to finalising its AI Act, the first law of its kind, but the UK government has already stated that it does not intend to follow suit, preferring a more flexible regulatory regime.

Its aim is to adopt a pro-innovation approach to regulation that it hopes will position the UK as a global leader in AI, balancing regulation against the promotion of ethical innovation.

Accordingly, in a White Paper issued for consultation in March 2023, the government set out its intention to establish a regulatory regime based on certain characteristics and principles. It does, however, recognise that a pro-innovation approach must be balanced against the obvious ethical and public trust issues that AI raises.

Where are we now?

As of the date of this article, the government is assessing feedback from the consultation on the White Paper before it announces its final proposals to implement its AI regulatory plans.

What will the UK’s AI regulatory regime look like?

The government plans to empower existing regulators to oversee AI within their current remits, supported by a new central AI function and a set of cross-sector principles that should bring some consistency across the board.

The proposed cross-sector principles are:

- Safety, security and robustness

- Appropriate transparency and explainability of AI products

- Fairness

- Accountability and governance

- Contestability and redress

The regime is intended to be delivered in two phases: first, a “discretionary” phase in which regulators can respond to, and prioritise, the needs of their sectors as appropriate; and second, a phase in which regulators would be legally bound to have due regard to the cross-sector principles.

What does it mean for businesses?

As the regime is still in its infancy, it will be interesting to see how the principles of AI regulation flow through the supply chain. The Competition and Markets Authority (CMA) has already raised concerns about the potential ramifications of having so few players at the upstream end of the AI Foundation Models market (e.g. developers of Large Language Models), which could have an impact at any level of the downstream supply chain (i.e. any business that deploys AI products/services, whether within a B2B value chain or as an end business or consumer user). Cloud supply chains are now so complex, and industry use of Large Language Models is now so widespread, that the regulatory headache remains a work in progress.

The government recognises that accountability throughout the AI lifecycle is important and that responsibility for standards and ethics should be allocated to the appropriate “actors” in the supply chain. However, at this stage more regulatory questions have been posed than solutions provided.

Just as when any third-party software product or service is procured to add value to a business’s product or service range, the usual considerations apply. Some of the key questions a business should ask itself as part of an AI risk assessment are as follows:

1. Does the business have the right to use the AI product/service (i) for the purpose for which it intends to use it, and (ii) for as long as it intends to use it?

We are already hearing from clients who have found that they do not have the rights they had hoped for to embed or use AI applications, and so have had to stop using the software. Alternatively, businesses may in future find themselves facing an unexpected licence fee (we have seen this happen with software, although not yet with AI). Of course, a reasonable fee can be passed on to a client (contract allowing!), but we have seen companies presented with a large invoice for historical use. If the correct permissions are not in place, the result is wasted time and cost and, ultimately, a devalued product and business. So it is well worth checking the terms of the AI licence at the product development stage, whether or not the AI is open source, and in every case establishing what the terms of use are, especially regarding commercialisation and sub-licensing.

2. Are there substitutes that the business can turn to?

This question is closely linked to the supply side of AI, which raises a more fundamental issue: a potential lack of substitutes, which can have a wider-reaching impact on markets in general. As mentioned above, the CMA is concerned about the small number of Foundation Model Developers relative to downstream users. It would therefore be sensible to consider a fallback option.

3. Does the AI element need to be updated, and if so, by whom?

This may well depend on the nature of the product/service and how it is accessed. If the core AI software relies on updates and data from a third party, has this been factored in, and what is the provenance of that data?

4. Are there any data protection implications?

Following on from the above, no conversation about AI can be complete without referencing personal data. It is no secret that AI is a data-thirsty beast. Cloud access to AI-related products potentially makes it easier than ever for user data to flow back into the jaws of that beast. We have not checked at the time of writing, but it would be interesting to see whether any contractual terms make access to data a precondition of using AI. If that data is personal data, then, as we all now know, the rules need to be followed!

For businesses building products and services based on AI products or code, understanding the provenance of the rights in the core AI is going to be more critical than ever. Similarly, businesses supplying AI products within their supply chain may increasingly be asked to give assurances about the provenance of those AI products/services.

For any business that operates at an intermediary level, the concept of residual risk is not new. Residual risk does not mean the risk cannot be accepted; it just means that an informed decision needs to be made to accept and manage it.

Discover the benefits of Lawpoint 4 Digital Gateway, offering a complimentary digital legal audit for your unique digital product/service mix. Connect with Tracey at tracey@law-point.co.uk or call 01202 729444 to schedule your appointment.


© The Contract Shop t/a Lawpoint

Information correct as at 11 January 2024